ConvNet vs Transformer, Supervised vs CLIP: Beyond ImageNet Accuracy

Kirill Vishniakov¹ Zhiqiang Shen¹ Zhuang Liu²

Abstract

Modern computer vision offers a great variety of models to practitioners, and selecting a model from multiple options for specific applications can be challenging. Conventionally, competing model architectures and training protocols are compared by their classification accuracy on ImageNet. However, this single metric does not fully capture performance nuances critical for specialized tasks. In this work, we conduct an in-depth comparative analysis of model behaviors beyond ImageNet accuracy, for both ConvNet and Vision Transformer architectures, each across supervised and CLIP training paradigms. Although our selected models have similar ImageNet accuracies and compute requirements, we find that they differ in many other aspects: types of mistakes, output calibration, transferability, and feature invariance, among others. This diversity in model characteristics, not captured by traditional metrics, highlights the need for more nuanced analysis when choosing among different models.

Models are often compared only by their ImageNet accuracy, ignoring many other aspects of their behavior. Our study shows that models with similar ImageNet performance can have vastly different properties.

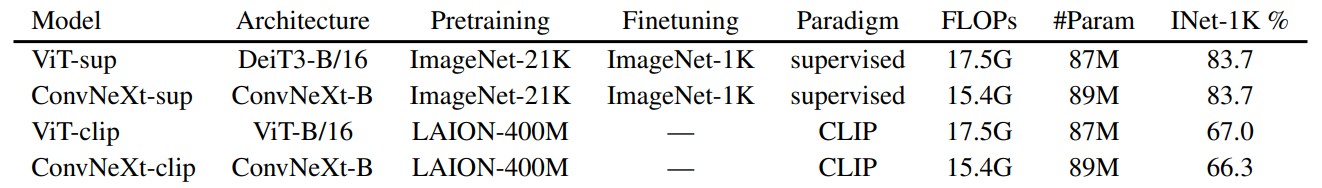

Models

To examine the impact of architecture and training objective on model performance, we compare Vision Transformer (ViT) with ConvNeXt, two modern architectures with comparable ImageNet-1K validation accuracies and computational requirements. We contrast supervised models, represented by DeiT3-Base/16 and ConvNeXt-Base, with the vision encoders of CLIP models from OpenCLIP.

Property Analysis

Our analysis is designed to investigate model behaviors that can be evaluated without the need for further training or finetuning. This approach is particularly relevant for practitioners with limited computational resources, who often depend on pretrained models. While we recognize the value of downstream tasks like object detection, our focus is on properties that offer insights with minimal computational demands and reflect behaviors important for real-world applications.

Model Mistakes

ImageNet-X is a dataset that extends ImageNet-1K with detailed human annotations for 16 factors of variation, enabling an in-depth analysis of model mistakes in image classification. It uses an error ratio metric (lower is better): a model's error rate on images annotated with a given factor divided by its overall error rate. This normalization makes it possible to compare mistakes across models with different overall accuracy. ImageNet-X results illustrate that:

- CLIP models make fewer mistakes relative to their ImageNet accuracy than supervised models.

- All models suffer mostly from complex factors like occlusion.

- Texture is the most challenging factor for all models.
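The error ratio can be illustrated with a minimal sketch. It assumes per-image correctness flags and a set of annotated factor names per image. The real ImageNet-X annotation format is not reproduced here, and the function name `error_ratios` is hypothetical. A ratio above 1 means the model errs more often on that factor than it does overall.

```python
def error_ratios(correct, factor_sets, factor_names):
    """Hypothetical helper: ImageNet-X-style error ratio per factor.

    correct:      list of bools, one per image (prediction == label).
    factor_sets:  list of sets, the factor names annotated for each image
                  (an image can carry several factors).
    factor_names: factors to report.
    """
    n = len(correct)
    overall_error = 1.0 - sum(correct) / n
    if overall_error == 0:
        raise ValueError("error ratio is undefined for a model with zero overall error")

    ratios = {}
    for name in factor_names:
        # Correctness flags restricted to images annotated with this factor.
        subset = [c for c, fs in zip(correct, factor_sets) if name in fs]
        if not subset:
            continue  # factor never annotated in this sample
        factor_error = 1.0 - sum(subset) / len(subset)
        # Lower is better: 1.0 means "as error-prone as on average".
        ratios[name] = factor_error / overall_error
    return ratios


if __name__ == "__main__":
    correct = [True, True, False, False, True, True, True, True]
    factor_sets = [{"texture"}, set(), {"occlusion", "texture"}, {"occlusion"},
                   set(), set(), set(), set()]
    print(error_ratios(correct, factor_sets, ["occlusion", "texture"]))
```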

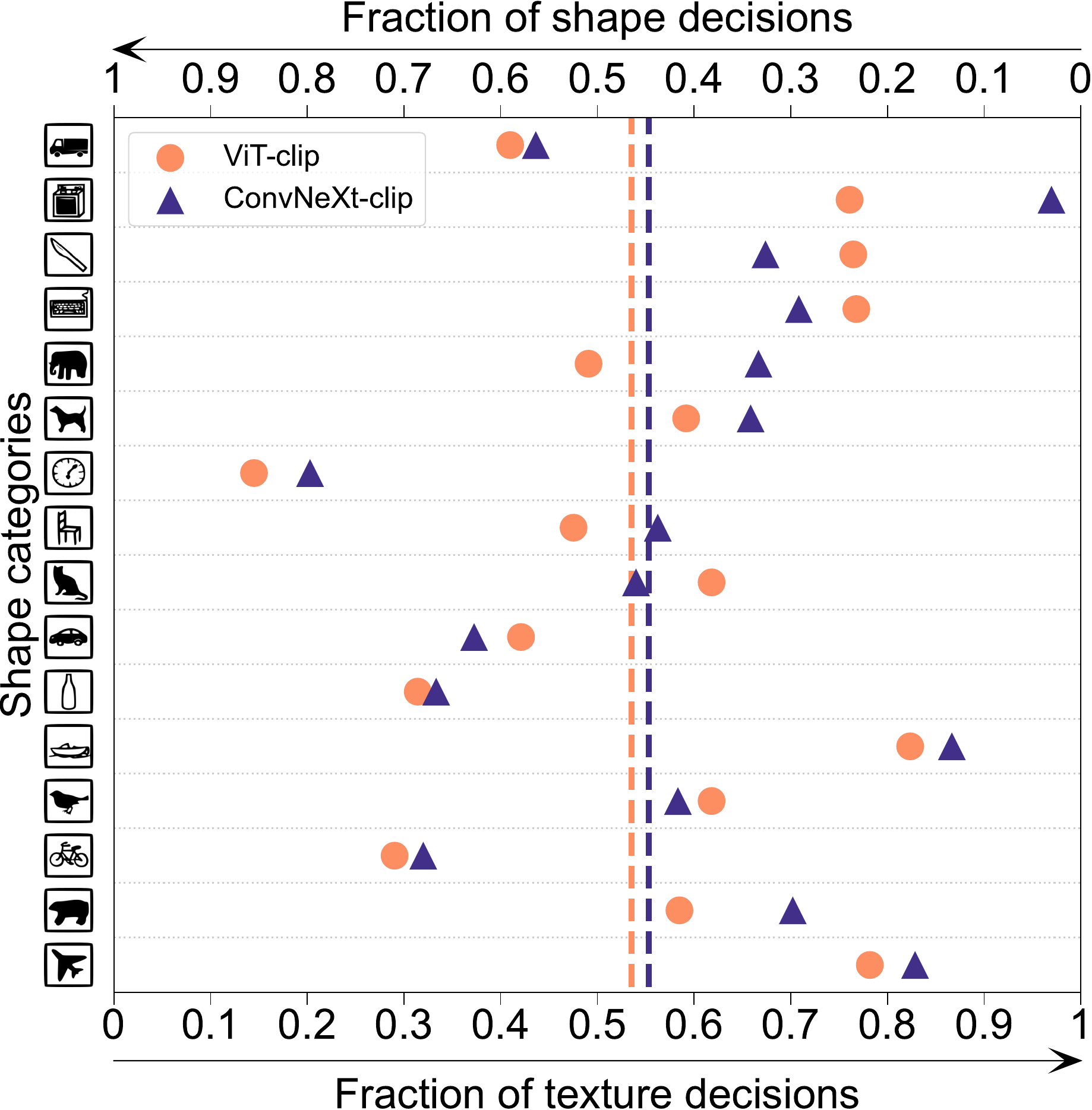

Shape / Texture Bias

Shape-texture bias examines whether models rely on brittle texture shortcuts rather than high-level shape cues. It can be studied using cue-conflict images, which combine the shape of one class with the texture of another. Such images reveal how much a model's decisions are based on shape rather than texture. We evaluate shape-texture bias on the cue-conflict dataset and observe two patterns. First, CLIP models have a smaller texture bias than supervised models. Second, ViT models have a higher shape bias than ConvNets.
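Shape bias is commonly defined as the fraction of shape decisions among all decisions that match either cue. Images whose shape and texture come from the same class are excluded. The sketch below assumes this common definition. It also assumes that 1000-way ImageNet predictions have already been mapped to the coarse cue-conflict categories. The function name `shape_bias` is hypothetical. Texture bias is then `1 - shape_bias`.

```python
import math


def shape_bias(predictions, shape_labels, texture_labels):
    """Hypothetical helper: fraction of shape-consistent cue-conflict decisions.

    All three sequences hold coarse category names; predictions are assumed to be
    already mapped from ImageNet classes to the cue-conflict categories.
    """
    shape_hits = 0
    texture_hits = 0
    for pred, shape, texture in zip(predictions, shape_labels, texture_labels):
        if shape == texture:
            continue  # no conflict between cues, so the image says nothing about bias
        if pred == shape:
            shape_hits += 1
        elif pred == texture:
            texture_hits += 1
        # Predictions matching neither cue are left out of the denominator.
    decided = shape_hits + texture_hits
    if decided == 0:
        return math.nan
    return shape_hits / decided


if __name__ == "__main__":
    preds = ["cat", "elephant", "cat", "car", "dog", "knife"]
    shapes = ["cat", "cat", "cat", "bottle", "dog", "bird"]
    textures = ["elephant", "elephant", "dog", "car", "dog", "clock"]
    print(shape_bias(preds, shapes, textures))
```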

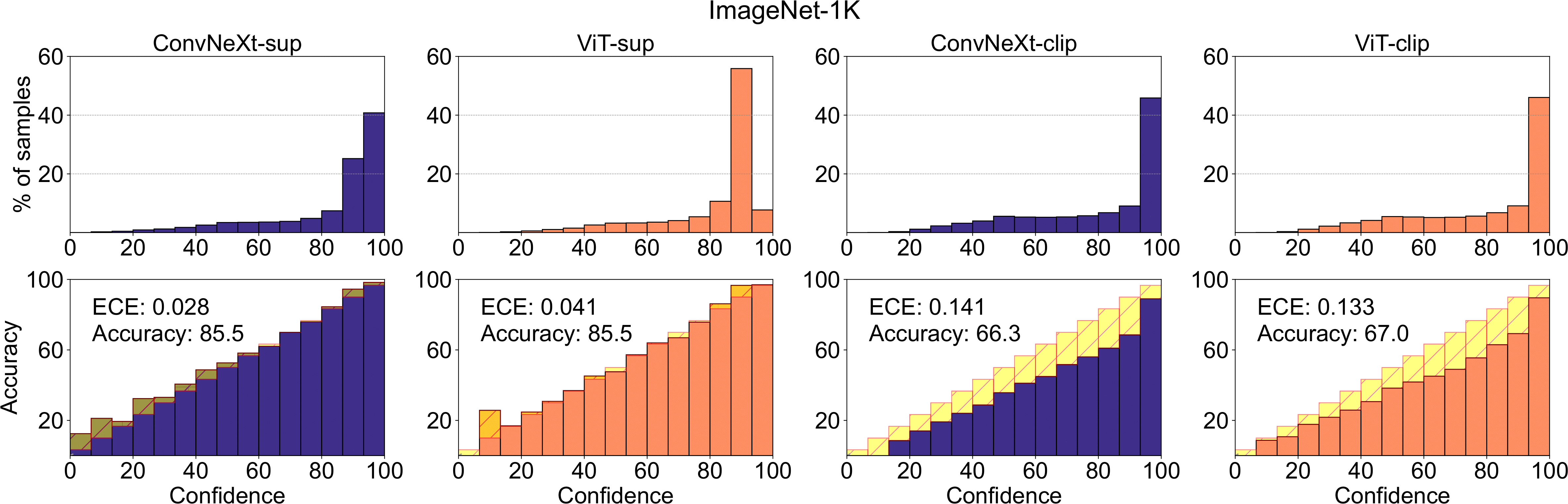

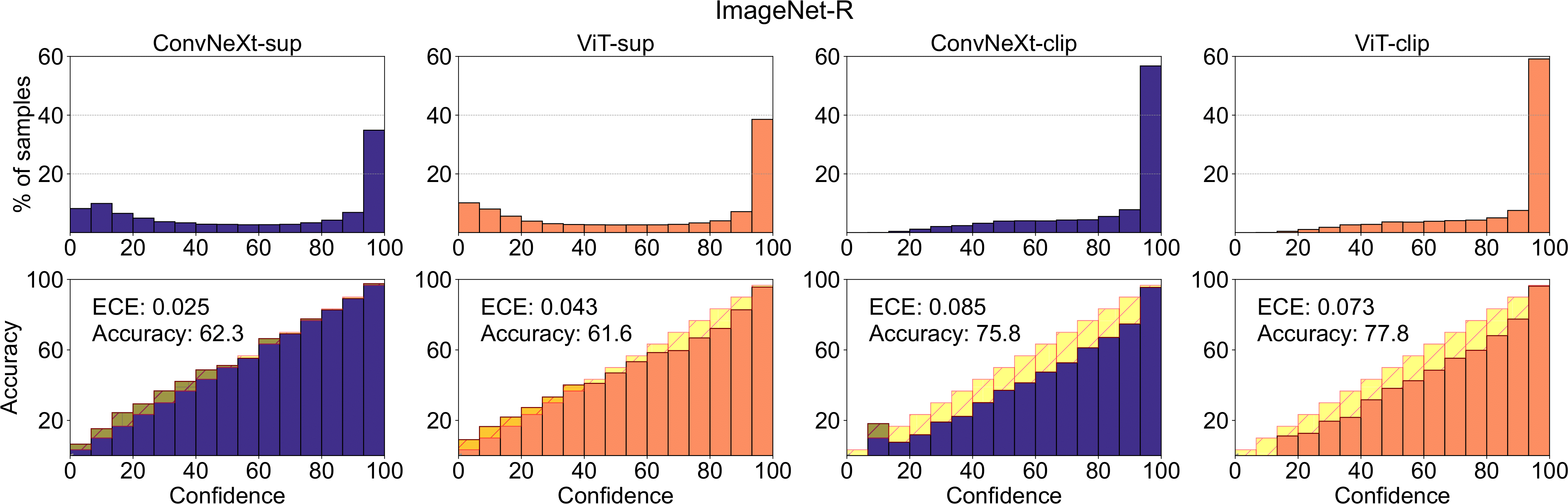

Model Calibration

Calibration quantifies whether a model’s predicted confidence aligns with its actual accuracy. This is assessed through metrics such as Expected Calibration Error (ECE) and visual tools including reliability diagrams and confidence histograms. We evaluate calibration on ImageNet-1K and ImageNet-R, dividing predictions into 15 bins. In our experiments we observe the following:

- CLIP models are overconfident and supervised models are slightly underconfident.

- Supervised ConvNeXt is better calibrated than supervised ViT.
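The following sketch shows how Expected Calibration Error is typically computed with 15 equal-width confidence bins, matching the bin count above. It assumes that each prediction's confidence is its top-1 softmax probability. The helper name is hypothetical, and this is not presented as the authors' evaluation code. A bin where the mean confidence exceeds accuracy indicates overconfidence.

```python
import math


def expected_calibration_error(confidences, predictions, labels, n_bins=15):
    """Hypothetical helper: ECE over equal-width bins (k/n_bins, (k+1)/n_bins]."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, pred, label in zip(confidences, predictions, labels):
        # Map confidence to its bin; clamp so 0.0 and 1.0 land in valid bins.
        idx = min(max(math.ceil(conf * n_bins) - 1, 0), n_bins - 1)
        bins[idx].append((conf, pred == label))

    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(ok for _, ok in b) / len(b)
        # Weight each bin's |accuracy - confidence| gap by its share of samples.
        ece += len(b) / n * abs(accuracy - avg_conf)
    return ece


if __name__ == "__main__":
    # Four predictions at 90% confidence, only half correct: an overconfident model.
    print(expected_calibration_error([0.9] * 4, [0, 1, 2, 3], [0, 1, 5, 5]))
```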

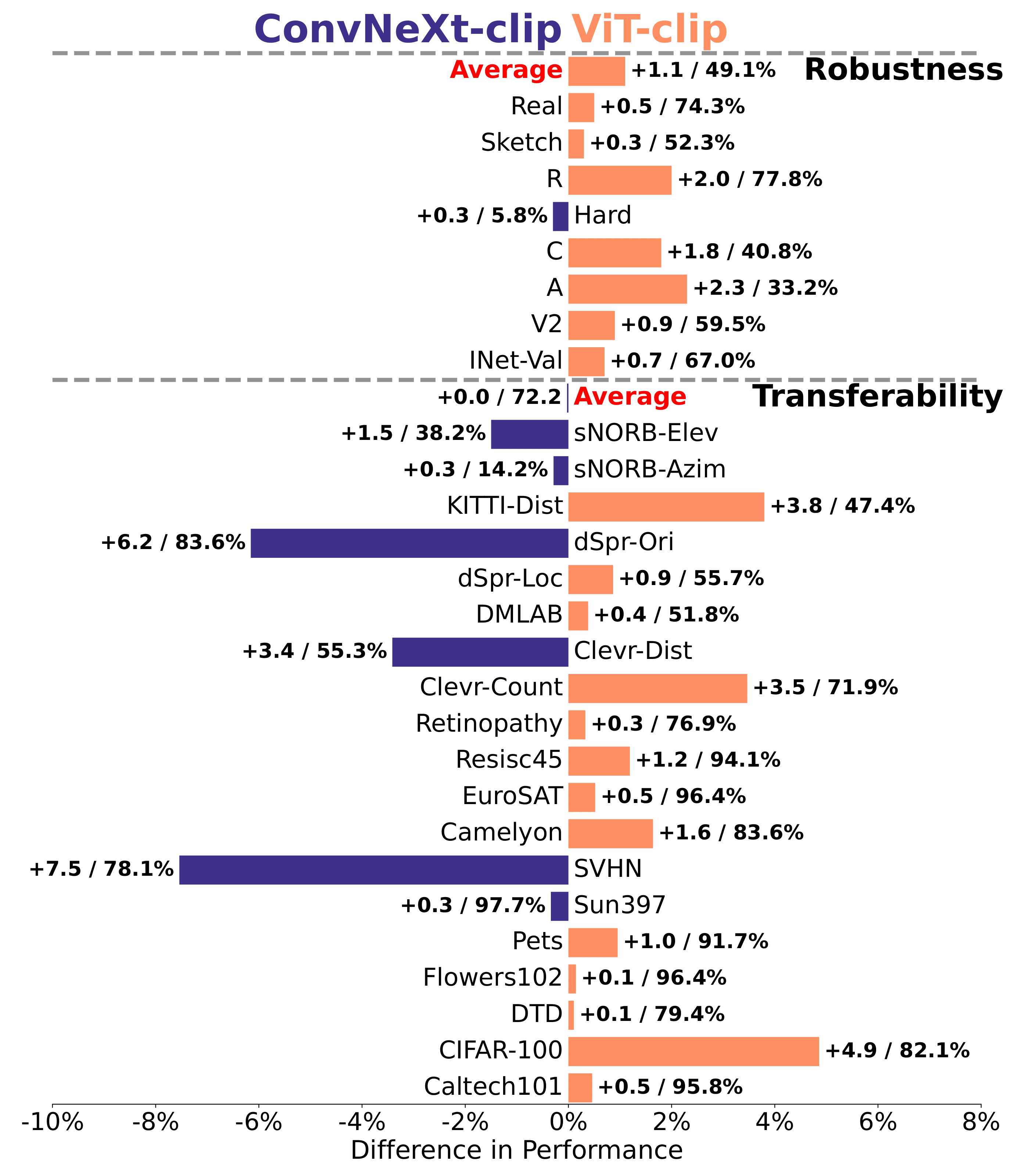

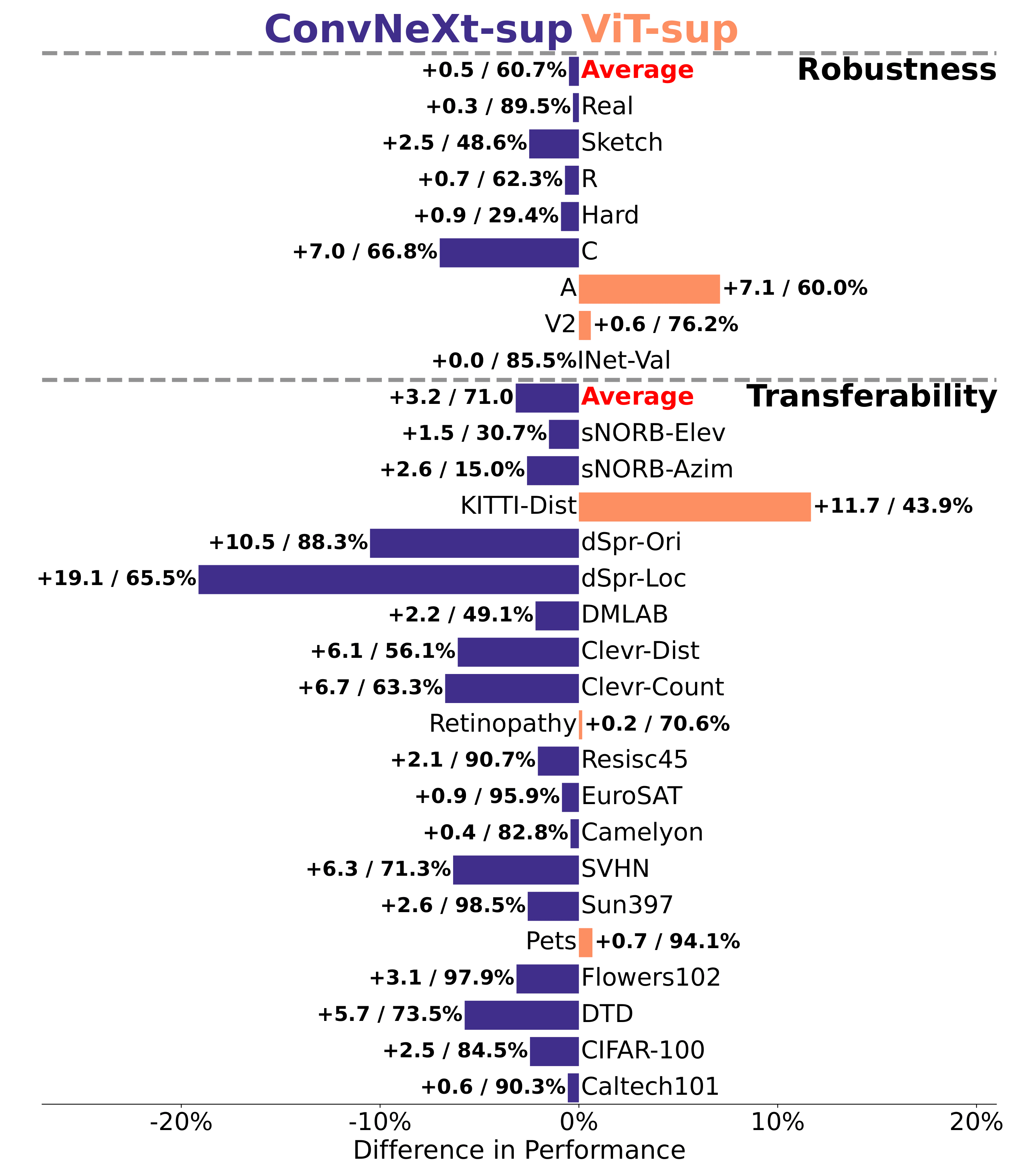

Robustness & Transferability

Model robustness and transferability are essential for adapting to data distribution shifts and new tasks. We evaluate robustness on various ImageNet variants. ViT and ConvNeXt models have comparable average performance. Supervised models generally outperform CLIP models, except on ImageNet-R and ImageNet-Sketch. We evaluate transferability on the VTAB benchmark of 19 datasets. There, supervised ConvNeXt outperforms supervised ViT and nearly matches the performance of CLIP models.

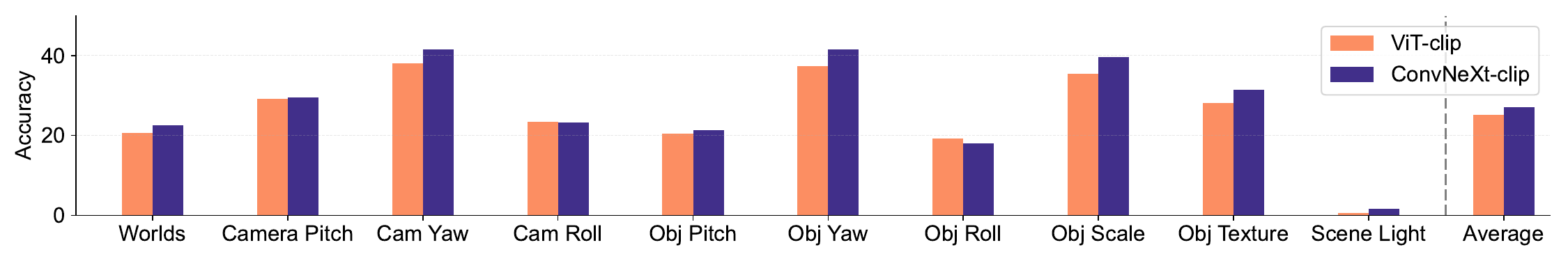

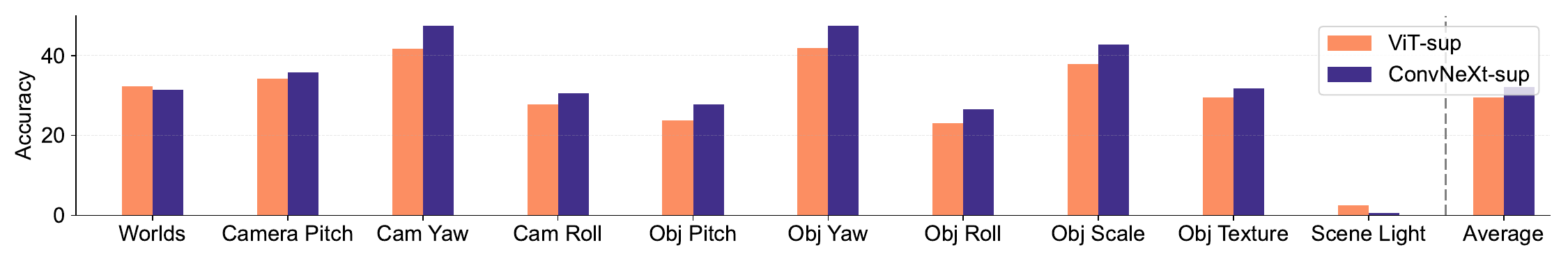

Synthetic Data

Synthetic datasets such as PUG-ImageNet allow precise control over factors like camera angle and texture and are emerging as a promising research avenue, so we also analyze model performance on synthetic data. PUG-ImageNet contains photorealistic images of ImageNet classes with systematic variation in factors such as pose and lighting, and performance is measured as absolute top-1 accuracy. Across the factors in PUG-ImageNet, ConvNeXt outperforms ViT on nearly all of them, which suggests that ConvNeXt handles synthetic data better than ViT. The gap is smaller for CLIP models. CLIP models also score lower than supervised models on PUG-ImageNet, likely because of their lower accuracy on the original ImageNet.
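PUG-ImageNet results are reported as absolute top-1 accuracy per factor, unlike ImageNet-X's relative error ratio. The aggregation is therefore a plain per-factor accuracy. The sketch assumes each evaluated image is recorded as a hypothetical `(factor, predicted_class, true_class)` tuple. The dataset's actual metadata layout is not reproduced here.

```python
from collections import defaultdict


def accuracy_by_factor(records):
    """Hypothetical helper: absolute top-1 accuracy for each varied factor.

    records: iterable of (factor_name, predicted_class, true_class) tuples,
             one per image, where factor_name is the factor varied in that image.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for factor, pred, label in records:
        totals[factor] += 1
        hits[factor] += int(pred == label)
    # Plain accuracy per factor; no normalization by overall accuracy.
    return {factor: hits[factor] / totals[factor] for factor in totals}


if __name__ == "__main__":
    records = [("pose", 1, 1), ("pose", 2, 1), ("lighting", 5, 5), ("lighting", 5, 5)]
    print(accuracy_by_factor(records))
```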

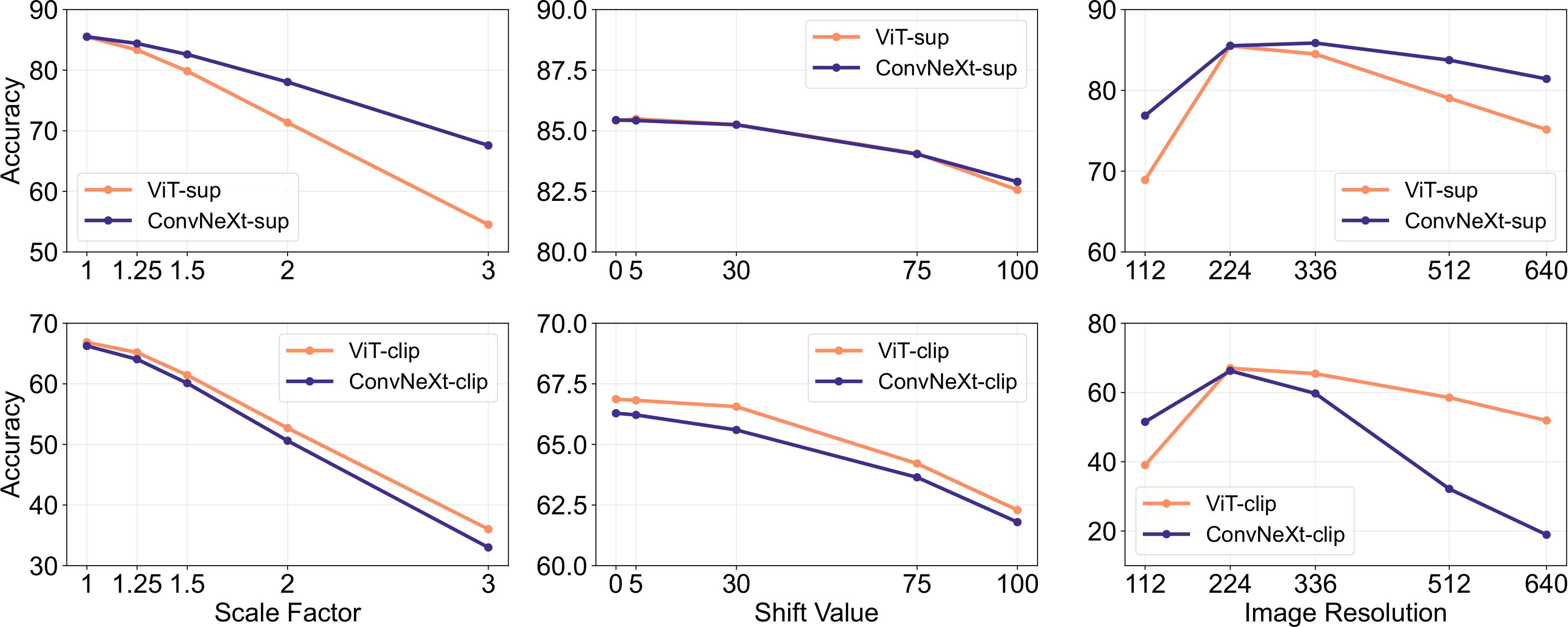

Transformation Invariance

Transformation invariance refers to a model's ability to produce consistent representations under input transformations that preserve semantic meaning, such as scaling or shifting. This property enables models to generalize well across different but semantically similar inputs. We assess invariance on ImageNet-1K to three transformations:

- scale, by resizing images;
- shift, by moving the crop location;
- resolution, by changing the input resolution, with interpolated positional embeddings for the ViT model.

Under supervised training, ConvNeXt outperforms ViT. Overall, models are more robust to shift than to scale or resolution changes. For applications requiring high robustness to scale, shift, and resolution, our results indicate that supervised ConvNeXt could be the best choice.
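Evaluating ViT at a new resolution requires resizing its grid of positional embeddings. The NumPy sketch below makes several assumptions: a single [CLS] prefix token, a square patch grid (for example 224 / 16 = 14 patches per side), and bilinear resizing. Bicubic interpolation is also common in public implementations, and the paper's exact procedure is not specified here. The function name `interpolate_pos_embed` is hypothetical.

```python
import numpy as np


def interpolate_pos_embed(pos_embed: np.ndarray, new_grid: int,
                          num_prefix_tokens: int = 1) -> np.ndarray:
    """Hypothetical helper: bilinearly resize ViT positional embeddings.

    pos_embed: array of shape (num_prefix_tokens + g * g, dim).
    Returns an array of shape (num_prefix_tokens + new_grid * new_grid, dim);
    prefix tokens such as [CLS] are copied unchanged.
    """
    prefix = pos_embed[:num_prefix_tokens]
    patch_tokens = pos_embed[num_prefix_tokens:]
    old_grid = int(round(np.sqrt(patch_tokens.shape[0])))
    assert old_grid * old_grid == patch_tokens.shape[0], "patch tokens must form a square grid"
    dim = patch_tokens.shape[1]
    grid = patch_tokens.reshape(old_grid, old_grid, dim)

    # Centers of the new cells expressed in old-grid coordinates (align_corners=False style).
    coords = (np.arange(new_grid) + 0.5) * old_grid / new_grid - 0.5
    coords = np.clip(coords, 0, old_grid - 1)
    lo = np.floor(coords).astype(int)
    hi = np.minimum(lo + 1, old_grid - 1)
    w = coords - lo  # weight given to the higher-index neighbour

    # Interpolate along rows first, then along columns.
    rows = grid[lo] * (1 - w)[:, None, None] + grid[hi] * w[:, None, None]
    out = rows[:, lo] * (1 - w)[None, :, None] + rows[:, hi] * w[None, :, None]
    return np.concatenate([prefix, out.reshape(new_grid * new_grid, dim)], axis=0)


if __name__ == "__main__":
    pos_224 = np.random.default_rng(0).standard_normal((1 + 14 * 14, 768))
    pos_384 = interpolate_pos_embed(pos_224, new_grid=384 // 16)
    print(pos_384.shape)
```

Keeping the prefix tokens untouched reflects the fact that [CLS] has no spatial position, so only the patch grid needs resampling.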

Conclusions

We found that each model can have its own distinct strengths. Model selection should therefore depend on the target use case, because standard performance metrics may overlook key task-specific nuances. In addition, many existing benchmarks are derived from ImageNet, which biases the evaluation. Developing new benchmarks with different data distributions will be crucial for evaluating models in contexts that better represent real-world use.

ConvNet vs Transformer

- Supervised ConvNeXt outperforms supervised ViT on many benchmarks: it is better calibrated, more invariant to data transformations, and shows better transferability and robustness.

- ConvNeXt outperforms ViT on synthetic data.

- ViT has a higher shape bias.

Supervised vs CLIP

- Although CLIP models transfer better, supervised ConvNeXt is competitive on this task, which shows the potential of supervised models.

- Supervised models are better at robustness benchmarks, likely because these are ImageNet variants.

- CLIP models have a higher shape bias and make fewer classification mistakes relative to their ImageNet accuracy.

Contact

ki [dot] vishniakov [at] gmail [dot] com

Citation

@article{vishniakov2023convnet,
  title={ConvNet vs Transformer, Supervised vs CLIP: Beyond ImageNet Accuracy},
  author={Kirill Vishniakov and Zhiqiang Shen and Zhuang Liu},
  year={2023},
  eprint={2311.09215},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}